NVIDIA® Tesla® V100 is the world’s most advanced data center GPU ever built to accelerate AI, HPC, and graphics.

NVIDIA® Tesla® V100 with 16 GB HBM2 memory is the world’s most advanced data center GPU ever built to accelerate AI, HPC, and graphics. Powered by NVIDIA Volta™, the latest GPU architecture, Tesla V100 offers the performance of up to 100 CPUs in a single GPU—enabling data scientists, researchers, and engineers to tackle challenges that were once thought impossible.

Tesla V100 is the flagship product of the Tesla data center computing platform for deep learning, HPC, and graphics. The Tesla platform accelerates over 450 HPC applications and every major deep learning framework. It is available everywhere from desktops to servers to cloud services, delivering both dramatic performance gains and cost-saving opportunities.

PNY provides unsurpassed service and commitment to its professional graphics customers, offering a 3-year warranty, pre- and post-sales support, dedicated Quadro Field Application Engineers, and direct tech support hotlines.

| MEMORY SIZE | 16 GB CoWoS HBM2 with ECC | |

| MEMORY BUS WIDTH | 4096-bit | |

| MEMORY BANDWIDTH | 900 GB/s | |

| CUDA CORES | 5120 | |

| PEAK DOUBLE-PRECISION FLOATING POINT PERFORMANCE |

7 Tflops (GPU Boost Clocks) | |

| PEAK SINGLE PRECISION FLOATING POINT PERFORMANCE |

14 Tflops (GPU Boost Clocks) | |

| TENSOR PERFORMANCE | 112 Tflops (GPU Boost Clocks) | |

| SYSTEM INTERFACE | PCI Express 3.0 x16 | |

| MAX POWER CONSUMPTION | 250 W | |

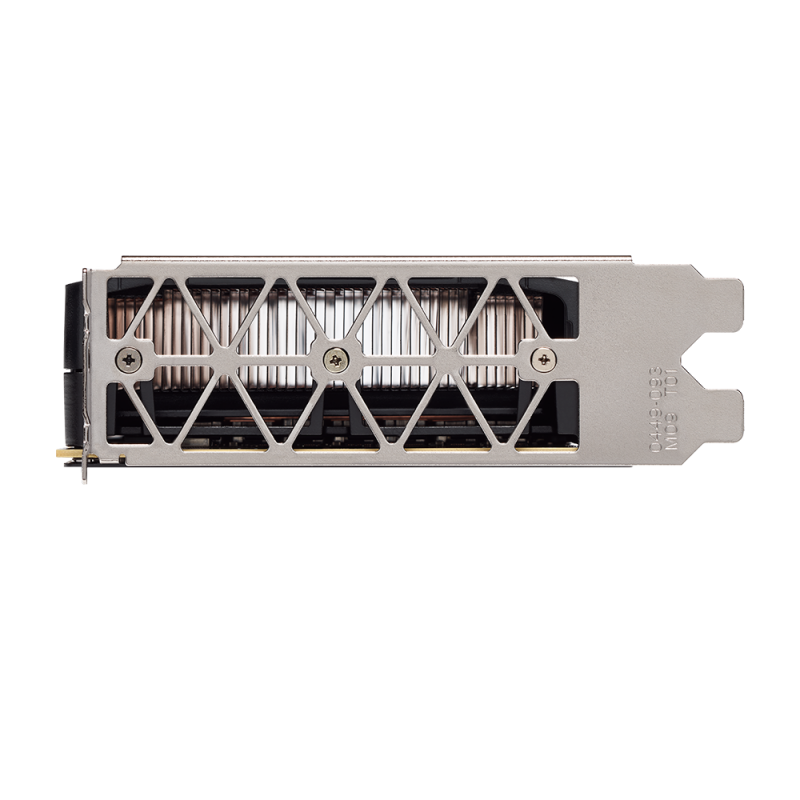

| THERMAL SOLUTION | passive Heatsink | |

| FORM FACTOR | 111,15 mm (H) x 267,7 mm (L) Dual Slot, Full Height | |

| DISPLAY CONECTORS | None | |

| POWER CONNECTORS | 8-pin CPU power connector | |

| WEIGHT (W/O EXTENDER) | 1196g | |

| PACKAGE CONTENT | 1x Power adapter (2 x PCIe 8-pit auf single CPU 8-pin) | |

| PART NUMBER AND EAN | TCSV100MPCIE-PB EAN: 3536403359041 TCSV100M-32GB-PB EAN: 3526403363710 |

|
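The headline figures in the specification table above can be cross-checked against one another with simple arithmetic. The following is a minimal sketch, not a vendor tool: it assumes each CUDA core retires one fused multiply-add (2 floating-point operations) per clock, and that memory bandwidth is spread evenly across the bus. The function names are hypothetical, and the derived clock and per-pin rate are implied values, not figures stated in the datasheet.

```python
# Figures copied from the specification table.
CUDA_CORES = 5120
PEAK_FP32_TFLOPS = 14.0
PEAK_FP64_TFLOPS = 7.0
PEAK_TENSOR_TFLOPS = 112.0
MEMORY_BUS_BITS = 4096
MEMORY_BANDWIDTH_GB_S = 900.0

# Assumption (not from the datasheet): one fused multiply-add,
# counted as 2 FLOPs, per CUDA core per clock cycle.
FLOPS_PER_CORE_PER_CLOCK = 2


def implied_boost_clock_mhz(peak_tflops, cores,
                            flops_per_clock=FLOPS_PER_CORE_PER_CLOCK):
    """Clock (MHz) at which `cores` would reach `peak_tflops`."""
    return peak_tflops * 1e12 / (cores * flops_per_clock) / 1e6


def implied_pin_rate_gbit_s(bandwidth_gb_s, bus_bits):
    """Per-pin data rate (Gbit/s) implied by total bandwidth and bus width."""
    return bandwidth_gb_s * 8 / bus_bits


if __name__ == "__main__":
    clock = implied_boost_clock_mhz(PEAK_FP32_TFLOPS, CUDA_CORES)
    pin_rate = implied_pin_rate_gbit_s(MEMORY_BANDWIDTH_GB_S, MEMORY_BUS_BITS)
    print(f"Implied boost clock:  {clock:.0f} MHz")
    print(f"Implied per-pin rate: {pin_rate:.2f} Gbit/s")
    print(f"FP32 : FP64 ratio:    {PEAK_FP32_TFLOPS / PEAK_FP64_TFLOPS:.0f}x")
    print(f"Tensor : FP32 ratio:  {PEAK_TENSOR_TFLOPS / PEAK_FP32_TFLOPS:.0f}x")
```

Under these assumptions, the listed figures imply a boost clock of roughly 1.37 GHz and a per-pin memory rate of about 1.76 Gbit/s. The double-precision peak is half the single-precision peak, and the tensor peak is eight times it.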